How can I estimate statistical power for a structural equation model?

This is a question that often arises when using structural equation models in practice, sometimes once a study is completed but more often in the planning phase of a future study. To think about power, we must first consider the ways in which we can make errors in hypothesis testing (Cohen, 1992). Briefly, the Type I error rate is the probability of incorrectly rejecting a true null hypothesis; this is the probability that an effect will be found in a sample when there is truly no effect in the population. In contrast, the Type II error rate is the probability of failing to reject a false null hypothesis; this is the probability that an effect will not be found in a sample when there truly is an effect in the population. Statistical power is one minus the Type II error rate and represents the probability of correctly rejecting a false null hypothesis; this is the probability that an effect will be found in the sample if an effect truly exists in the population. It is important to determine whether a proposed study will have sufficient power to detect an effect if an effect really exists. Although power is quite easy to compute for simple tests such as a t-test or a test of a single regression parameter, it becomes increasingly complicated to compute for complex SEMs.


There are a number of reasons why it is more complex to compute power for an SEM. First, we must clearly articulate precisely which effect we want to estimate power for. In any SEM, there are many types of effects in which we might be interested, and these may have different effect sizes within the very same model. For example, we might want to compute the power to detect a single parameter (say a treatment effect); or we might instead be interested in the power to detect a single mediated effect, or several mediated effects; or we might be interested in the power to reject the model as a whole. Even once the effects of interest are clearly identified and the associated effect sizes are determined, there are three general approaches to estimating power from which to choose.

The first is based on the methods originally proposed by Satorra and Saris (1985). For this approach, a chi-square difference test is used to determine the power of detecting the omission of one or more specific parameters (although it can be applied to other types of model restrictions as well, such as equality constraints). It is based on the non-centrality parameter, which quantifies how far the non-central chi-square distribution (which holds when the model is incorrect) is shifted away from the central chi-square distribution (which holds when the model is correct). This method works very well, although one challenge is that all parameters that define the model must be assigned specific numerical values. Further, tests are limited to evaluating constraints (e.g., omitted parameters, equated parameters), and it is difficult to estimate power for effects such as mediated pathways. Finally, the validity of the test rests on all underlying assumptions being met (e.g., sufficient sample size, multivariate normality, linearity, and complete data).
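To make the role of the non-centrality parameter concrete, here is a minimal sketch of the final step of this kind of calculation for the special case of a single omitted parameter (a 1-df test). It assumes the population discrepancy `f0` is already known, that is, the minimized fit function obtained when the restricted model is fit to the covariance matrix implied by the alternative model; the value 0.03 used below is purely hypothetical, and the function names are illustrative. It also assumes the non-centrality parameter is scaled as (N - 1) times `f0`, a common convention that some programs replace with N. For 1 df, the non-central chi-square tail area reduces to two normal tail areas, so only the Python standard library is needed.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def power_single_constraint(n, f0, alpha=0.05):
    """Approximate power of a 1-df chi-square difference test.

    n     : planned sample size
    f0    : population discrepancy of the restricted model (hypothetical input)
    alpha : Type I error rate of the test
    """
    # Non-centrality parameter, assuming the (N - 1) scaling convention.
    ncp = (n - 1) * f0
    delta = ncp ** 0.5
    # For 1 df the chi-square critical value is the squared two-sided z value.
    z_crit = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    # A non-central chi-square(1, ncp) variate is (Z + delta)^2, so its upper
    # tail beyond z_crit^2 is the sum of two normal tail areas.
    upper = 1 - _STD_NORMAL.cdf(z_crit - delta)
    lower = _STD_NORMAL.cdf(-z_crit - delta)
    return upper + lower


def min_n_for_power(f0, target=0.80, alpha=0.05, n_max=100_000):
    """Smallest n reaching the target power, or None if n_max is too small."""
    for n in range(2, n_max + 1):
        if power_single_constraint(n, f0, alpha) >= target:
            return n
    return None


if __name__ == "__main__":
    f0 = 0.03  # hypothetical discrepancy, chosen only for illustration
    for n in (100, 200, 400):
        print(n, round(power_single_constraint(n, f0), 3))
    print("minimum n for power .80:", min_n_for_power(f0))
```

Tests involving more than one constraint follow the same logic but require the general non-central chi-square distribution rather than this normal-based shortcut.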

A closely related alternative method for power estimation is based on a Monte Carlo simulation approach (e.g., Muthén & Muthén, 2002). Whereas the power of the chi-square difference test is derived analytically (that is, based on areas under the central and non-central chi-square distributions), the Monte Carlo approach builds an empirical sampling distribution of a given effect from many samples drawn from a simulated population. Like the Satorra-Saris approach, the Monte Carlo method also requires that all model parameters be assigned specific numerical values. However, a distinct advantage of this method is that it does not rely on asymptotic assumptions. As such, power can be estimated under conditions such as small sample size, non-normal distributions, or missing data. Further, power can easily be estimated for hypothesized effects that do not necessarily involve restrictions placed on parameters (e.g., indirect effects in mediation models).
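The logic of the Monte Carlo approach can be sketched with a deliberately simple example: a three-variable mediation path model (X to M to Y) with observed variables only. This is an illustration under stated assumptions, not the procedure of Muthén and Muthén (2002): the population values, the function names, the use of equation-by-equation least squares in place of a full SEM estimator, and the choice of a delta-method (Sobel) z test for the indirect effect are all simplifications made here to keep the code self-contained.

```python
import random
from statistics import NormalDist, fmean


def slope_one_predictor(x, y):
    """OLS slope and standard error for y = b0 + b1*x + e."""
    n = len(x)
    mx, my = fmean(x), fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    rss = sum((yi - my - b * (xi - mx)) ** 2 for xi, yi in zip(x, y))
    return b, (rss / (n - 2) / sxx) ** 0.5


def slope_two_predictors(x1, x2, y):
    """OLS slope of x1 (and its SE) in y = b0 + b1*x1 + b2*x2 + e."""
    n = len(y)
    m1, m2, my = fmean(x1), fmean(x2), fmean(y)
    s11 = sum((u - m1) ** 2 for u in x1)
    s22 = sum((v - m2) ** 2 for v in x2)
    s12 = sum((u - m1) * (v - m2) for u, v in zip(x1, x2))
    s1y = sum((u - m1) * (w - my) for u, w in zip(x1, y))
    s2y = sum((v - m2) * (w - my) for v, w in zip(x2, y))
    det = s11 * s22 - s12 ** 2
    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    rss = sum((w - my - b1 * (u - m1) - b2 * (v - m2)) ** 2
              for u, v, w in zip(x1, x2, y))
    return b1, (rss / (n - 3) * s22 / det) ** 0.5


def mc_power_indirect(n, a, b, c_prime=0.0, reps=1000, alpha=0.05, seed=1):
    """Proportion of simulated samples in which the indirect effect a*b is
    declared significant; a, b, and c_prime are assumed population values."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    rejections = 0
    for _ in range(reps):
        # Generate one sample from the assumed population model.
        x = [rng.gauss(0, 1) for _ in range(n)]
        m = [a * xi + rng.gauss(0, 1) for xi in x]
        y = [b * mi + c_prime * xi + rng.gauss(0, 1) for xi, mi in zip(x, m)]
        # Estimate both paths and test their product.
        a_hat, se_a = slope_one_predictor(x, m)
        b_hat, se_b = slope_two_predictors(m, x, y)
        se_ab = (a_hat ** 2 * se_b ** 2 + b_hat ** 2 * se_a ** 2) ** 0.5
        if abs(a_hat * b_hat / se_ab) > z_crit:
            rejections += 1
    return rejections / reps


if __name__ == "__main__":
    for n in (50, 100, 200):
        print(n, mc_power_indirect(n, a=0.3, b=0.3, reps=500))
```

Because the data-generating step is fully under the analyst's control, the same skeleton could be adapted to non-normal errors or missing data by changing how each sample is generated.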

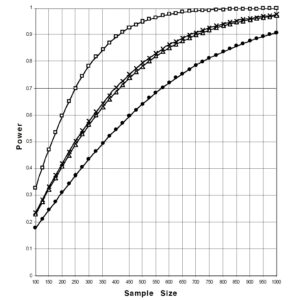

A final option is to compute the power for the omnibus test of the entire model using the approach of MacCallum et al. (1996). An advantage of this approach is that one need not define a specific parameter or hypothesized effect of interest. Instead, this approach focuses on the power of the overall test of model fit. This approach is closely related to both the Satorra-Saris and Monte Carlo methods and can be used to compute the power for what is called “exact fit”; that is, where the null hypothesis states that the hypothesized model is precisely correct in the population. However, the MacCallum approach naturally expands to estimate power for what is called “close fit”; that is, where the null hypothesis states that the hypothesized model is approximately correct in the population. This is accomplished through the use of the RMSEA instead of the likelihood ratio test statistic (as used in Satorra-Saris). Power estimation for tests of close fit is a key strength of this approach, although a potential limitation is that the power is computed at the level of the overall model and does not apply to specific effects of interest (e.g., a hypothesized mediated effect).
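The RMSEA-based calculation can be sketched as follows. The sketch assumes the relation commonly used with this approach, in which the non-centrality parameter equals (N - 1) × df × RMSEA², and it assumes conventional values of .05 (null) and .08 (alternative) for the test of close fit; setting the null RMSEA to zero gives the test of exact fit. To stay self-contained, the non-central chi-square distribution is computed with a small pure-Python routine written for this illustration (a Poisson mixture of central chi-square CDFs), which is adequate for moderate values but is not a substitute for a tested statistical library. All function names are illustrative.

```python
import math


def chi2_cdf(x, df):
    """Central chi-square CDF via the series for the incomplete gamma
    function (fine for moderate x; very large x could overflow)."""
    if x <= 0:
        return 0.0
    a, z = df / 2.0, x / 2.0
    term, total, k = 1.0, 1.0, 1
    while term > 1e-15 * total:
        term *= z / (a + k)
        total += term
        k += 1
    return total * math.exp(a * math.log(z) - z - math.lgamma(a + 1.0))


def ncx2_cdf(x, df, nc):
    """Non-central chi-square CDF as a Poisson-weighted sum of central CDFs."""
    if nc == 0:
        return chi2_cdf(x, df)
    half = nc / 2.0
    j_max = int(half + 10 * math.sqrt(half) + 30)  # truncate negligible tail
    total = 0.0
    for j in range(j_max + 1):
        log_w = -half + j * math.log(half) - math.lgamma(j + 1)
        total += math.exp(log_w) * chi2_cdf(x, df + 2 * j)
    return total


def ncx2_ppf(p, df, nc):
    """Quantile of the non-central chi-square by bisection."""
    lo, hi = 0.0, df + nc + 10 * math.sqrt(2 * (df + 2 * nc))
    for _ in range(50):
        mid = (lo + hi) / 2
        if ncx2_cdf(mid, df, nc) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def rmsea_power(n, df, rmsea0=0.05, rmsea_a=0.08, alpha=0.05):
    """Power to reject H0: RMSEA <= rmsea0 when the true value is rmsea_a."""
    if not rmsea0 < rmsea_a:
        raise ValueError("this sketch covers only rmsea0 < rmsea_a")
    nc0 = (n - 1) * df * rmsea0 ** 2   # non-centrality under the null
    nca = (n - 1) * df * rmsea_a ** 2  # non-centrality under the alternative
    crit = ncx2_ppf(1 - alpha, df, nc0)
    return 1 - ncx2_cdf(crit, df, nca)


if __name__ == "__main__":
    for n in (100, 200, 400):
        print(n, round(rmsea_power(n, df=50), 3))
```

Note that the model enters only through its degrees of freedom; no individual parameter values are required, which is what distinguishes this approach from the two described earlier.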

In sum, although it can be quite important to consider issues of power when planning a study that uses SEM, it can also be challenging to compute estimates that accurately reflect how the model will be estimated in practice. There are pros and cons to all three of these methods, and these approaches share more similarities than differences. An excellent recent review is presented by Lee, Cai, and MacCallum (2012).

References

Cohen, J. (1992). A power primer. Psychological Bulletin, 112, 155-159.

Lee, T., Cai, L., & MacCallum, R.C. (2012). Power analysis for tests of structural equation models. In R. Hoyle, D. Kaplan, G. Marcoulides, & S. West (Eds.), Handbook of Structural Equation Modeling (pp. 181-194). New York: Guilford.

MacCallum, R.C., Browne, M.W., & Sugawara, H.M. (1996). Power analysis and determination of sample size for covariance structure modeling. Psychological Methods, 1, 130-149.

MacCallum, R.C., Lee, T., & Browne, M.W. (2010). The issue of isopower in power analysis for tests of structural equation models. Structural Equation Modeling, 17, 23-41.

Muthén, B.O., & Curran, P.J. (1997). General longitudinal modeling of individual differences in experimental designs: A latent variable framework for analysis and power estimation. Psychological Methods, 2, 371–402.

Muthén, L.K., & Muthén, B.O. (2002). How to use a Monte Carlo study to decide on sample size and determine power. Structural Equation Modeling, 9, 599-620.

Satorra, A., & Saris, W. E. (1985). Power of the likelihood ratio test in covariance structure analysis. Psychometrika, 50, 83-90.